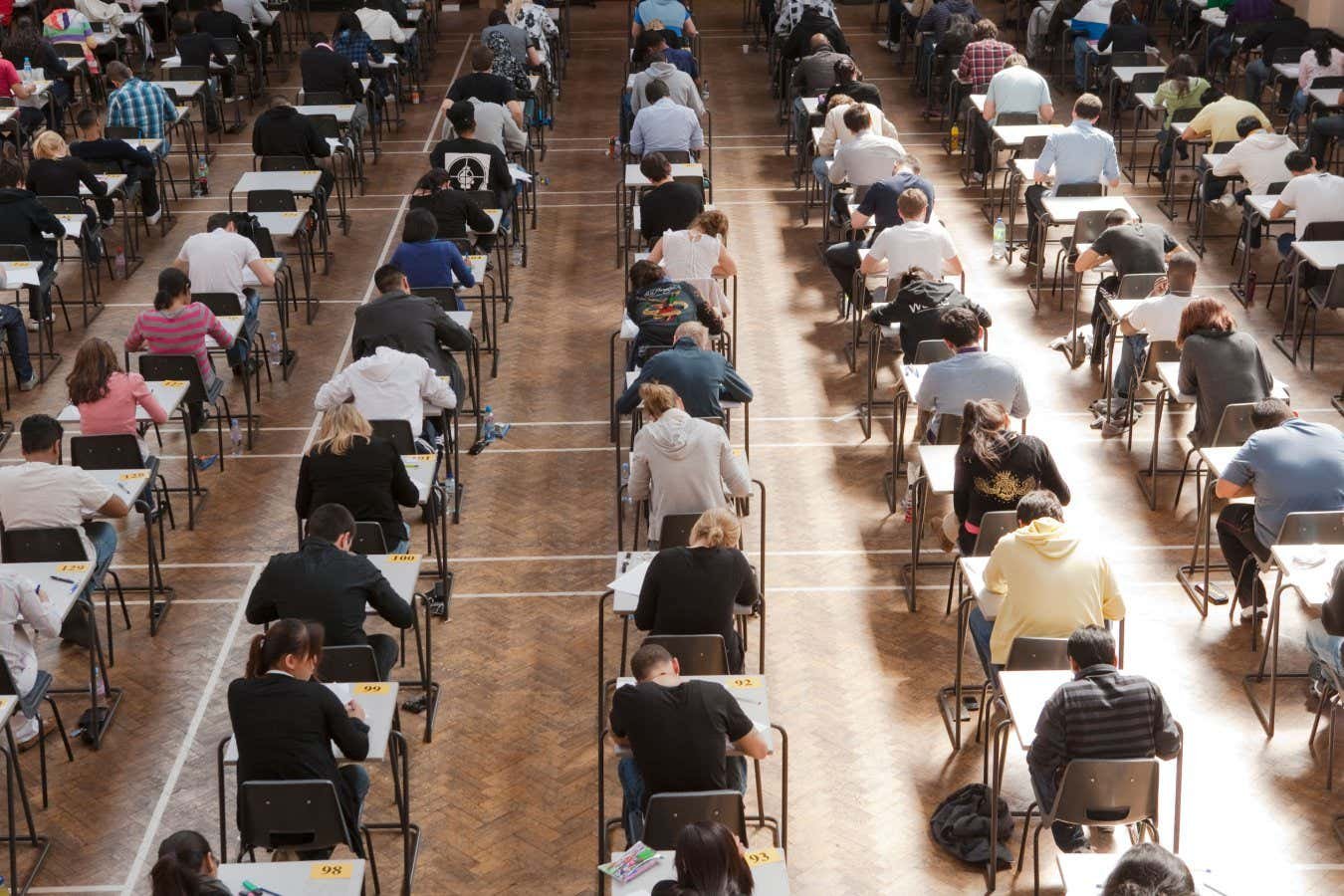

Exams taken in person make it harder for students to cheat using AI

Trish Gant / Alamy

Ninety-four per cent of university exam submissions created using ChatGPT weren’t detected as being generated by artificial intelligence, and these submissions tended to get higher scores than real students’ work.

Peter Scarfe at the University of Reading, UK, and his colleagues used ChatGPT to produce answers to 63 assessment questions on five modules across the university’s psychology undergraduate degrees. Students sat these exams at home, so they were allowed to look at notes and references, and they could potentially have used AI, although this wasn’t permitted.

The AI-generated answers were submitted alongside real students’ work, and accounted for, on average, 5 per cent of the total scripts marked by academics. The markers weren’t informed that they were checking the work of 33 fake students – whose names were themselves generated by ChatGPT.

The assessments included two types of questions: short answers and longer essays. The prompts given to ChatGPT began with the words “Including references to academic literature but not a separate reference section”, then copied the exam question.

Across all modules, only 6 per cent of the AI submissions were flagged as potentially not being a student’s own work – though in some modules, no AI-generated work was flagged as suspicious. “On average, the AI responses gained higher grades than our real student submissions,” says Scarfe, though there was some variability across modules.

“Current AI tends to struggle with more abstract reasoning and integration into information,” he adds. But across all 63 AI submissions, there was an 83.4 per cent chance that the AI work outscored that of the students.

The researchers claim that their work is the largest and most robust study of its kind to date. Although the study only checked work on the University of Reading’s psychology degree, Scarfe believes it is a concern for the whole academic sector. “I have no reason to think that other subject areas wouldn’t have just the same kind of issue,” he says.

“The results show exactly what I’d expect to see,” says Thomas Lancaster at Imperial College London. “We know that generative AI can produce reasonable sounding responses to simple, constrained textual questions.” He points out that unsupervised assessments including short answers have always been susceptible to cheating.

Heavy marking workloads also make it harder for academics to pick up AI fakery. “Time-pressured markers of short answer questions are highly unlikely to raise AI misconduct cases on a whim,” says Lancaster. “I am sure this isn’t the only institution where this is happening.”

Tackling the problem at source is going to be near-impossible, says Scarfe, so the sector must instead reconsider what it is assessing. “I think it’s going to take the sector as a whole to acknowledge the fact that we’re going to have to be building AI into the assessments we give to our students,” he says.
